Bayesian Diffusion Models for 3D Shape Reconstruction

Abstract

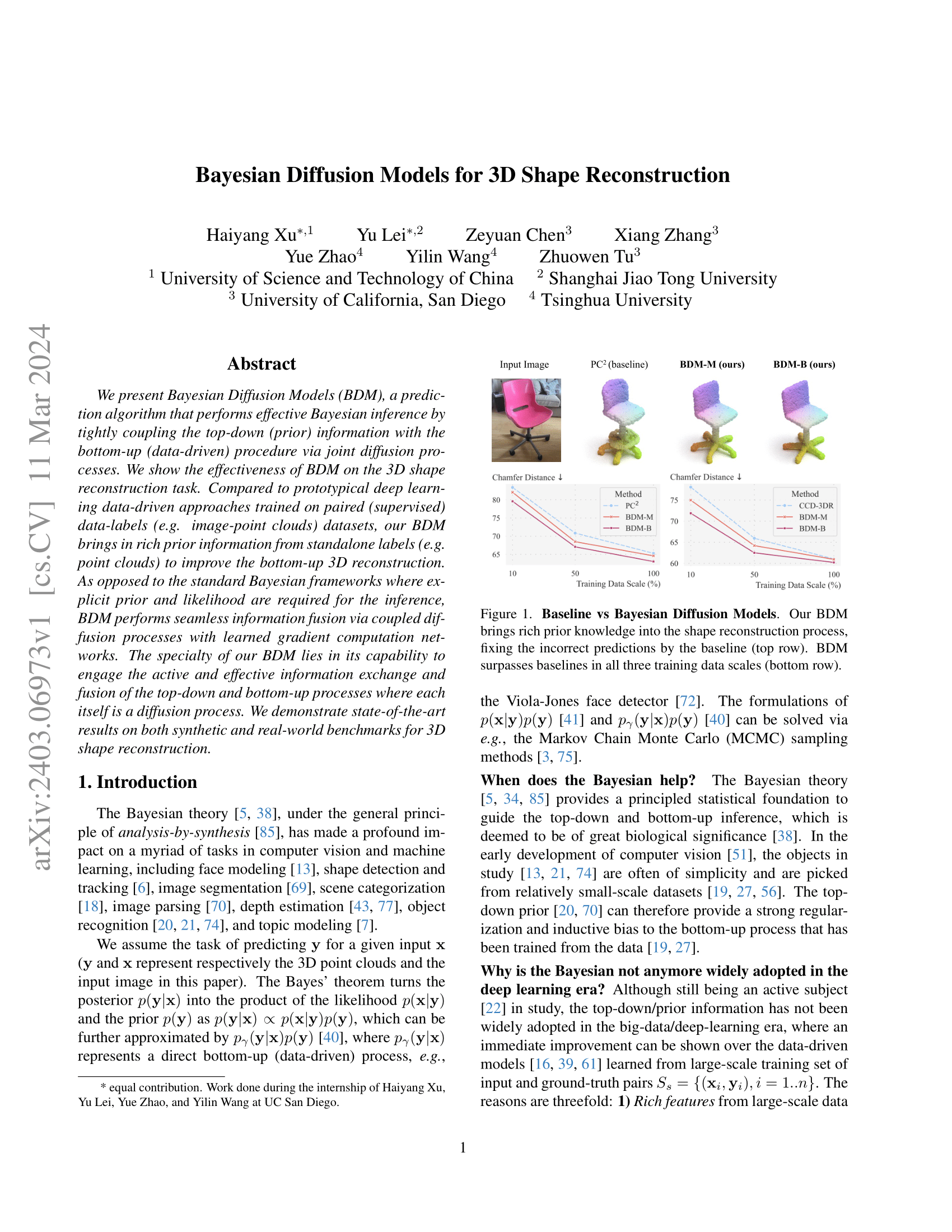

We present Bayesian Diffusion Models (BDM), a prediction algorithm that performs effective Bayesian inference by tightly coupling top-down (prior) information with the bottom-up (data-driven) procedure via joint diffusion processes. We show the effectiveness of BDM on the 3D shape reconstruction task. Compared with prototypical data-driven deep learning approaches trained on paired (supervised) data-label datasets (e.g., image and point cloud pairs), BDM brings in rich prior information from standalone labels (e.g., point clouds) to improve bottom-up 3D reconstruction. Unlike standard Bayesian frameworks, which require an explicit prior and likelihood for inference, BDM performs seamless information fusion via coupled diffusion processes with learned gradient computation networks. What distinguishes BDM is its ability to carry out active and effective information exchange and fusion between the top-down and bottom-up processes, each of which is itself a diffusion process. We demonstrate state-of-the-art results on both synthetic and real-world benchmarks for 3D shape reconstruction.
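For reference, the standard Bayesian setup that the abstract contrasts BDM with can be written as follows, taking $x$ as the input image and $y$ as the point cloud (the same symbols used in the method description). The posterior combines an explicit likelihood $p(x \mid y)$ and an explicit prior $p(y)$; in gradient (score) form the evidence $p(x)$ drops out. This is textbook background, not BDM's own derivation: per the abstract, BDM avoids specifying these terms explicitly and instead fuses learned diffusion processes.

$$
p(y \mid x) = \frac{p(x \mid y)\, p(y)}{p(x)}
\qquad\Longrightarrow\qquad
\nabla_{y} \log p(y \mid x) = \nabla_{y} \log p(x \mid y) + \nabla_{y} \log p(y)
$$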

Method

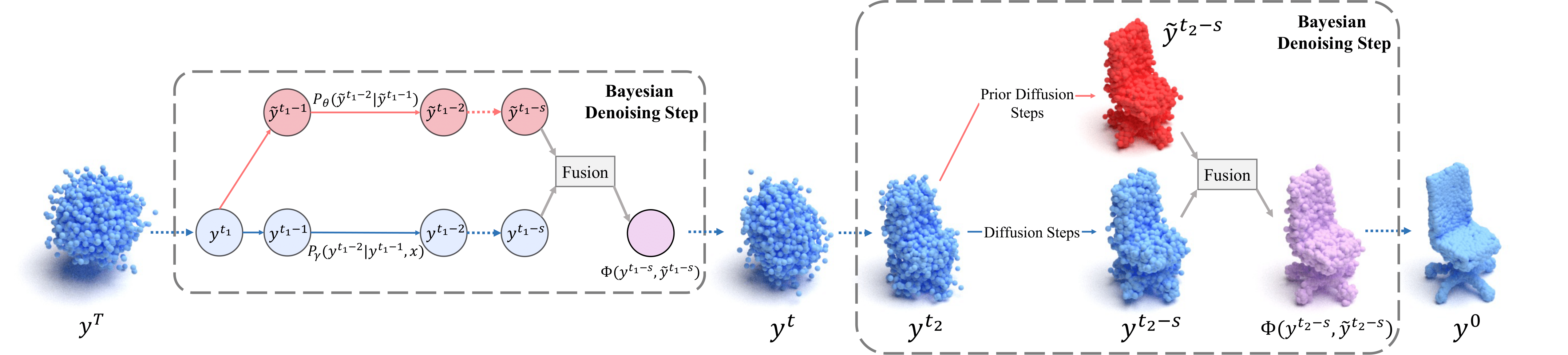

Overview of the generative process in our Bayesian Diffusion Model. In each Bayesian denoising step, the prior diffusion model is fused with the reconstruction process, bringing in rich prior knowledge and improving the quality of the reconstructed point cloud. We illustrate the Bayesian denoising step in two ways: on the left as a flowchart, and on the right as point clouds.

Blue Step:

Reconstructive Denoising Step $P(y_{t-1} \mid y_t, x)$

- A standard diffusion-based 3D reconstruction model, conditioned on the input image.

- Given the point cloud $y_t$ at timestep $t$ and the image $x$, it predicts the previous-step result $y_{t-1}$.

Red Step:

Generative Denoising Step $P(y_{t-1} \mid y_t)$

- A diffusion-based 3D generative model (the prior), which is not conditioned on the image.

- Given the point cloud $y_t$ at timestep $t$, it predicts the previous-step result $y_{t-1}$.
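Both steps above are reverse (denoising) steps of a diffusion model and differ only in whether the network sees the image $x$. The sketch below is a minimal illustration assuming the standard DDPM ancestral update with a linear noise schedule; the page does not state which sampler or schedule BDM's backbones use. `make_schedule`, `reverse_step`, `toy_eps_model`, and `sample` are hypothetical names, and `toy_eps_model` is an oracle stand-in for trained networks: given a condition it denoises toward it (the target cloud is passed in place of an image purely for illustration), otherwise toward a fixed prior shape.

```python
import numpy as np


def make_schedule(num_steps=100, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule (an assumption; the page does not specify one)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars


def reverse_step(y_t, t, eps_model, schedule, rng, x=None):
    """One DDPM ancestral step y_t -> y_{t-1}.

    With a condition x this plays the blue (reconstructive) step
    P(y_{t-1} | y_t, x); with x=None it plays the red (generative) step
    P(y_{t-1} | y_t).
    """
    betas, alphas, alpha_bars = schedule
    eps = eps_model(y_t, t, x)  # predicted noise in y_t
    mean = (y_t - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
    if t == 0:
        return mean  # no noise is added on the final step
    return mean + np.sqrt(betas[t]) * rng.standard_normal(y_t.shape)


def toy_eps_model(alpha_bars, prior_shape):
    """Hypothetical oracle in place of trained networks.

    With a condition x it returns the exact noise that points y_t back to x;
    with x=None it points back to a fixed prior shape instead.
    """
    def eps_model(y_t, t, x):
        target = prior_shape if x is None else x
        return (y_t - np.sqrt(alpha_bars[t]) * target) / np.sqrt(1.0 - alpha_bars[t])
    return eps_model


def sample(eps_model, schedule, shape, rng, x=None):
    """Run the full reverse chain from y_T ~ N(0, I) down to y_0."""
    y = rng.standard_normal(shape)
    for t in reversed(range(len(schedule[0]))):
        y = reverse_step(y, t, eps_model, schedule, rng, x=x)
    return y


rng = np.random.default_rng(0)
schedule = make_schedule()
target = rng.uniform(-1.0, 1.0, size=(2048, 3))  # toy stand-in for the condition
prior_shape = np.zeros((2048, 3))
model = toy_eps_model(schedule[2], prior_shape)
recon = sample(model, schedule, target.shape, rng, x=target)  # chain of blue steps
gen = sample(model, schedule, prior_shape.shape, rng)  # chain of red steps
```

Because the oracle returns the exact noise, the final $t = 0$ update recovers its target up to floating-point error, which makes the mechanics easy to check; a trained network only approximates this.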

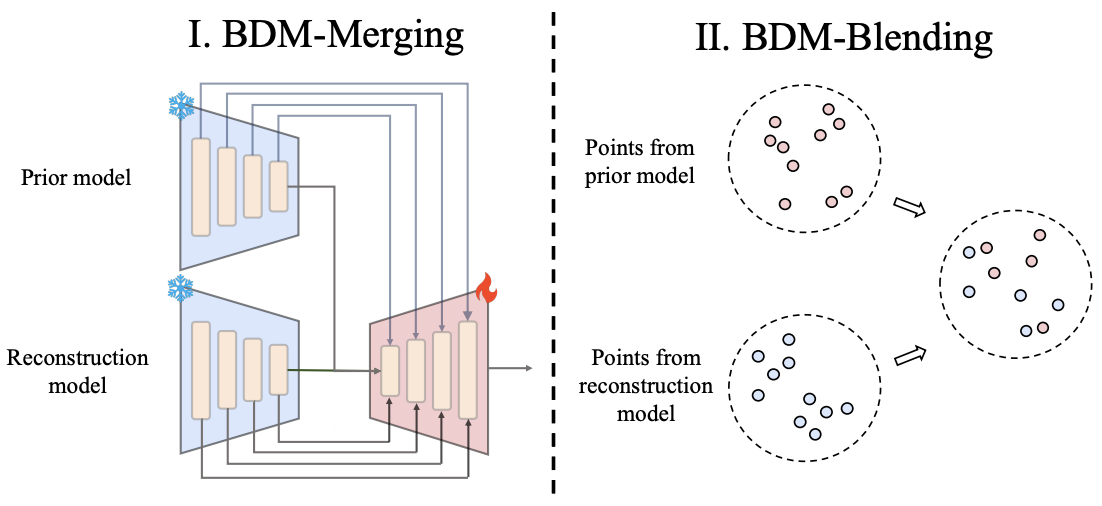

Illustration of our proposed fusion methods, BDM-Merging and BDM-Blending. The left side shows BDM-Merging and the right side shows the BDM-Blending module.
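The page does not spell out the fusion rules, so the following is only a hypothetical sketch of what a point-level blending step could look like. It assumes both the blue step and the red step are run from the same $y_t$, and that blending keeps a random fraction of points from the reconstructive output and replaces the rest with random points from the generative (prior) output. `blend_point_clouds`, `bayesian_denoising_step`, and `prior_ratio` are illustrative names, not BDM's API, and BDM-Merging is not modeled here.

```python
import numpy as np


def blend_point_clouds(y_rec, y_gen, prior_ratio, rng):
    """Hypothetical point-level blend of two (N, 3) point clouds.

    Keeps a random (1 - prior_ratio) share of the points from the
    reconstructive output and fills the remainder with randomly chosen
    points from the generative (prior) output, so the result has N points.
    """
    if not 0.0 <= prior_ratio <= 1.0:
        raise ValueError("prior_ratio must lie in [0, 1]")
    n = y_rec.shape[0]
    n_prior = int(round(prior_ratio * n))
    keep_idx = rng.choice(n, size=n - n_prior, replace=False)
    prior_idx = rng.choice(y_gen.shape[0], size=n_prior, replace=False)
    return np.concatenate([y_rec[keep_idx], y_gen[prior_idx]], axis=0)


def bayesian_denoising_step(y_t, t, x, recon_step, prior_step, prior_ratio, rng):
    """Hypothetical blending-style Bayesian denoising step y_t -> y_{t-1}.

    recon_step(y_t, t, x) plays the blue step P(y_{t-1} | y_t, x);
    prior_step(y_t, t) plays the red step P(y_{t-1} | y_t).
    """
    y_rec = recon_step(y_t, t, x)
    y_gen = prior_step(y_t, t)
    return blend_point_clouds(y_rec, y_gen, prior_ratio, rng)


# Toy usage: pretend-perfect steps, so the origin of each point is easy to see.
def toy_recon_step(y_t, t, x):
    return x  # stands in for an image-conditioned denoiser


def toy_prior_step(y_t, t):
    return np.zeros_like(y_t)  # stands in for the unconditional prior


rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=(1000, 3))
y_t = rng.standard_normal((1000, 3))
y_prev = bayesian_denoising_step(y_t, 10, x, toy_recon_step, toy_prior_step, 0.3, rng)
print(y_prev.shape)  # (1000, 3)
```

With `prior_ratio=0.3`, 300 of the 1000 output points come from the prior step (all zeros in this toy) and 700 from the reconstructive step; a ratio of 0 reduces to plain reconstruction.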

Experiments

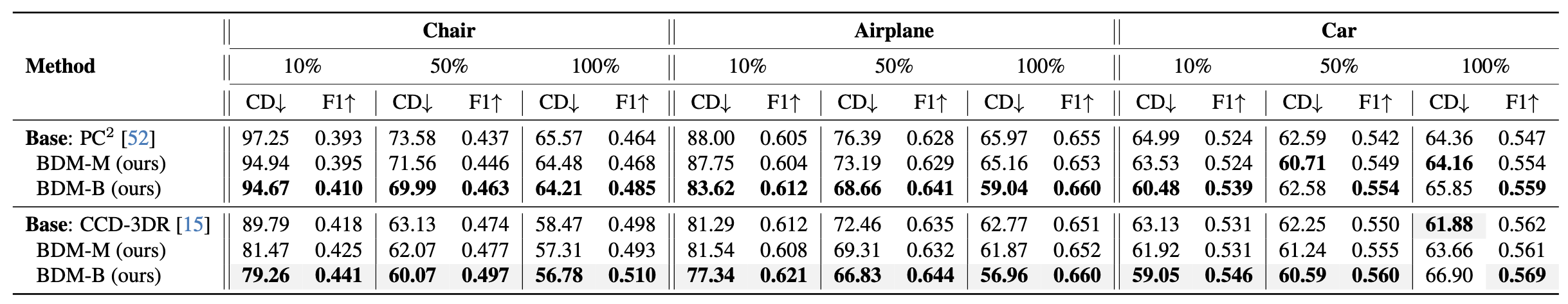

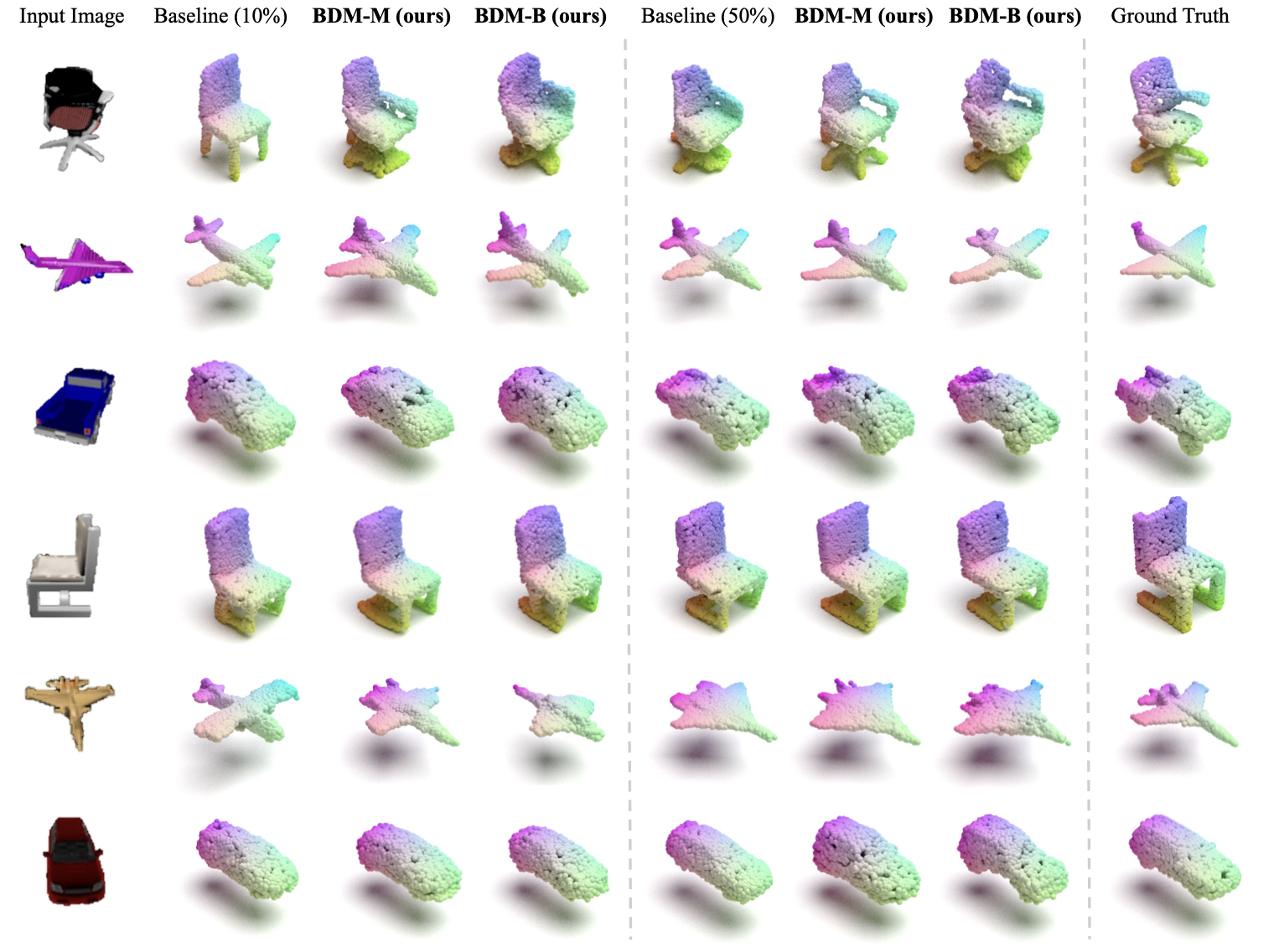

Quantitative results on the ShapeNet-R2N2 dataset. We compare both of our methods against each existing state-of-the-art (SOTA) diffusion-based reconstruction model on three categories: chair, airplane, and car. Within each category we test three cases that vary the amount of data used to train the reconstruction model; x% means that only x% of that category's ShapeNet-R2N2 data is used to train the reconstruction model.

Qualitative comparisons on the ShapeNet-R2N2 dataset.

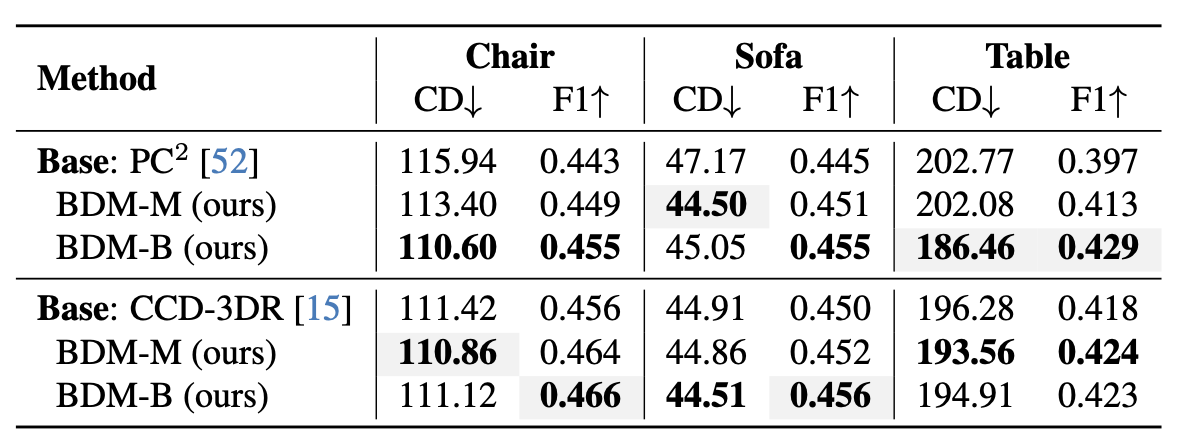

Quantitative results on the Pix3D dataset.

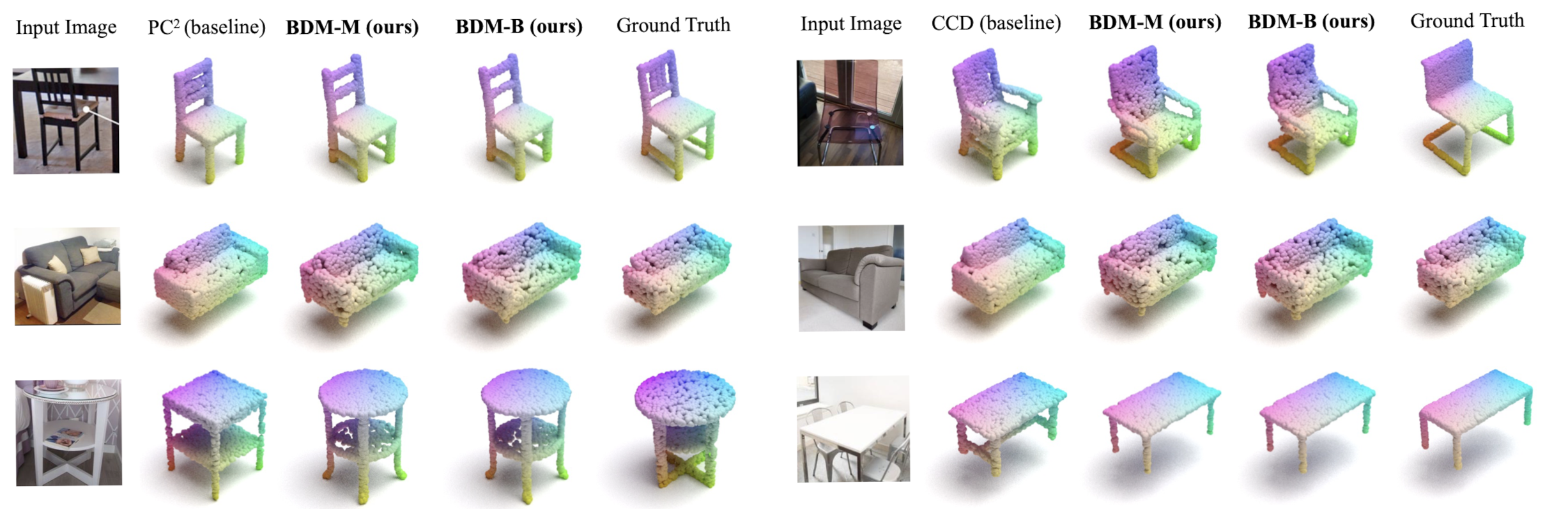

Qualitative comparisons on the Pix3D dataset.

Citation

@misc{xu2024bayesian,
  title={Bayesian Diffusion Models for 3D Shape Reconstruction},
  author={Haiyang Xu and Yu Lei and Zeyuan Chen and Xiang Zhang and Yue Zhao and Yilin Wang and Zhuowen Tu},
  year={2024},
  eprint={2403.06973},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

Paper

Bayesian Diffusion Models for 3D Shape Reconstruction

Haiyang Xu*, Yu Lei*, Zeyuan Chen, Xiang Zhang, Yue Zhao, Yilin Wang, Zhuowen Tu